Behavior

The Behavior team at the Allen Institute for Neural Dynamics develops standardized, modular behavioral platforms for head-fixed mice, enabling large-scale studies of brain function during learning and adaptive behavior. We focus on behaviors that reveal how interacting neural systems in the brain allow individuals to learn and adapt across contexts and timescales. Our paradigms combine sensory stimuli—auditory, visual, and olfactory—with natural movements such as licking, locomotion, and forelimb reaching. These tasks are designed to integrate with neural recording and perturbation methods, allowing systematic, targeted measurements across multiple brain regions.

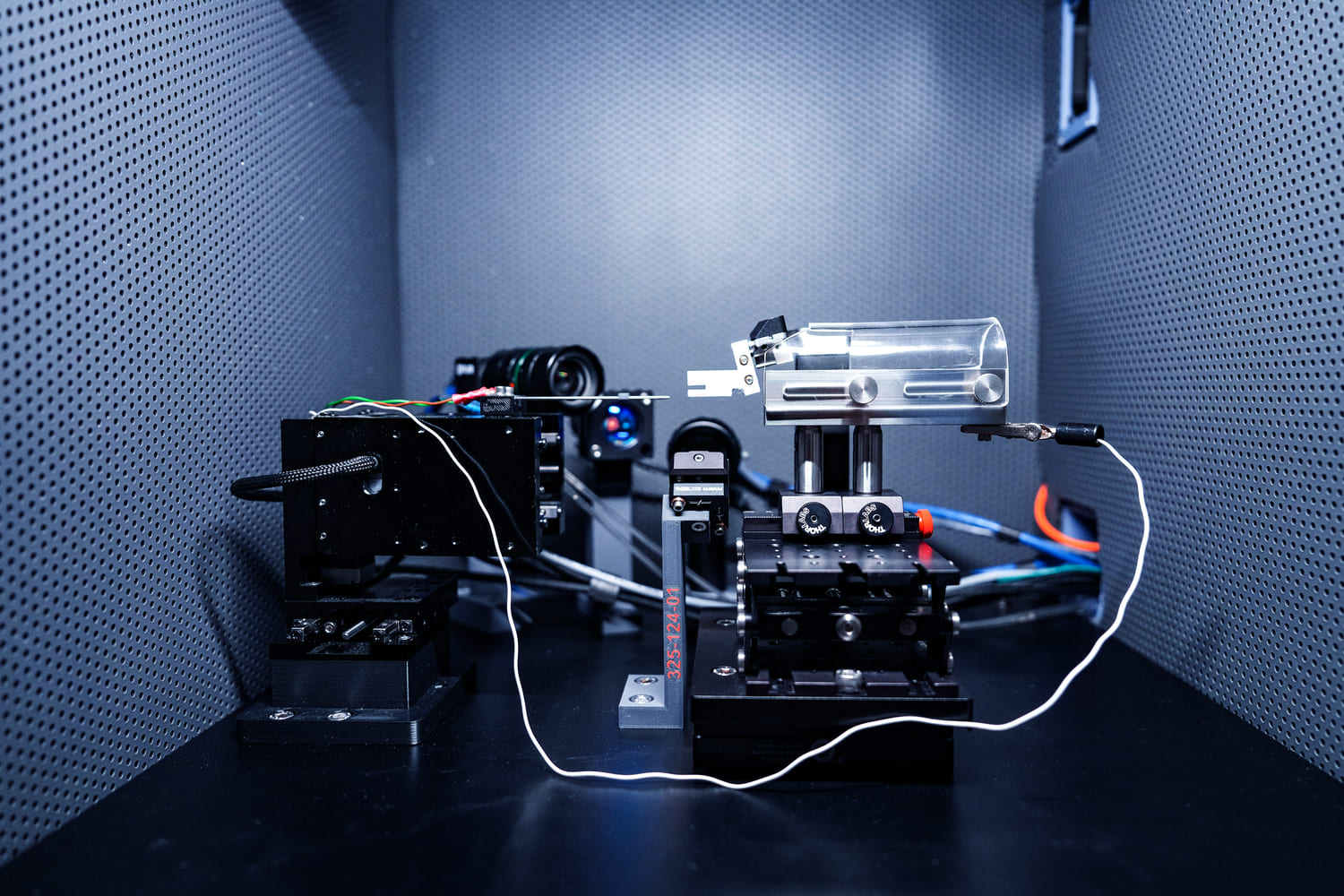

Animals make decisions in constantly changing environments. In this head-fixed, two-choice task, mice forage for water by licking left or right in response to a go-cue. The key challenge: reward probabilities shift dynamically between the two options over time. Mice must track these changes and adapt their choices accordingly, relying on reward prediction errors to update their decision variables. This task provides a simple yet powerful framework for studying how the brain learns and adapts to evolving reward landscapes.
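To make the idea of updating decision variables from reward prediction errors concrete, here is a minimal sketch of a simulated agent on a two-choice task with block-wise reversals of reward probability. It is an illustration under stated assumptions, not the team's analysis model: the Rescorla-Wagner update, the epsilon-greedy choice rule, and every name and parameter value (`rw_update`, `simulate_dynamic_foraging`, `alpha`, `epsilon`, `block_len`, the 0.8/0.2 reward probabilities) are hypothetical choices made for this example.

```python
import random


def rw_update(value, reward, alpha):
    """Rescorla-Wagner step: move the value toward the reward by alpha * RPE."""
    rpe = reward - value  # reward prediction error
    return value + alpha * rpe, rpe


def simulate_dynamic_foraging(n_trials=400, block_len=100, alpha=0.3,
                              epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy agent licking left (0) or right (1).

    All parameter values are illustrative assumptions, not task settings.
    """
    rng = random.Random(seed)
    q = [0.5, 0.5]          # decision variables: estimated value of each side
    p_reward = [0.8, 0.2]   # hidden reward probabilities (assumed values)
    history = []
    for t in range(n_trials):
        # Swap the reward probabilities at each block boundary.
        if t > 0 and t % block_len == 0:
            p_reward.reverse()
        # Explore with probability epsilon, otherwise pick the higher value.
        if rng.random() < epsilon:
            choice = rng.randrange(2)
        else:
            choice = 0 if q[0] >= q[1] else 1
        reward = 1.0 if rng.random() < p_reward[choice] else 0.0
        # Only the chosen option's value is updated.
        q[choice], rpe = rw_update(q[choice], reward, alpha)
        history.append((choice, reward, rpe))
    return history, q


if __name__ == "__main__":
    history, q = simulate_dynamic_foraging()
    print("final values:", q)
```

After each reversal, negative prediction errors on the previously better side pull its value down until the agent switches, which is the adaptation the task is designed to measure.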

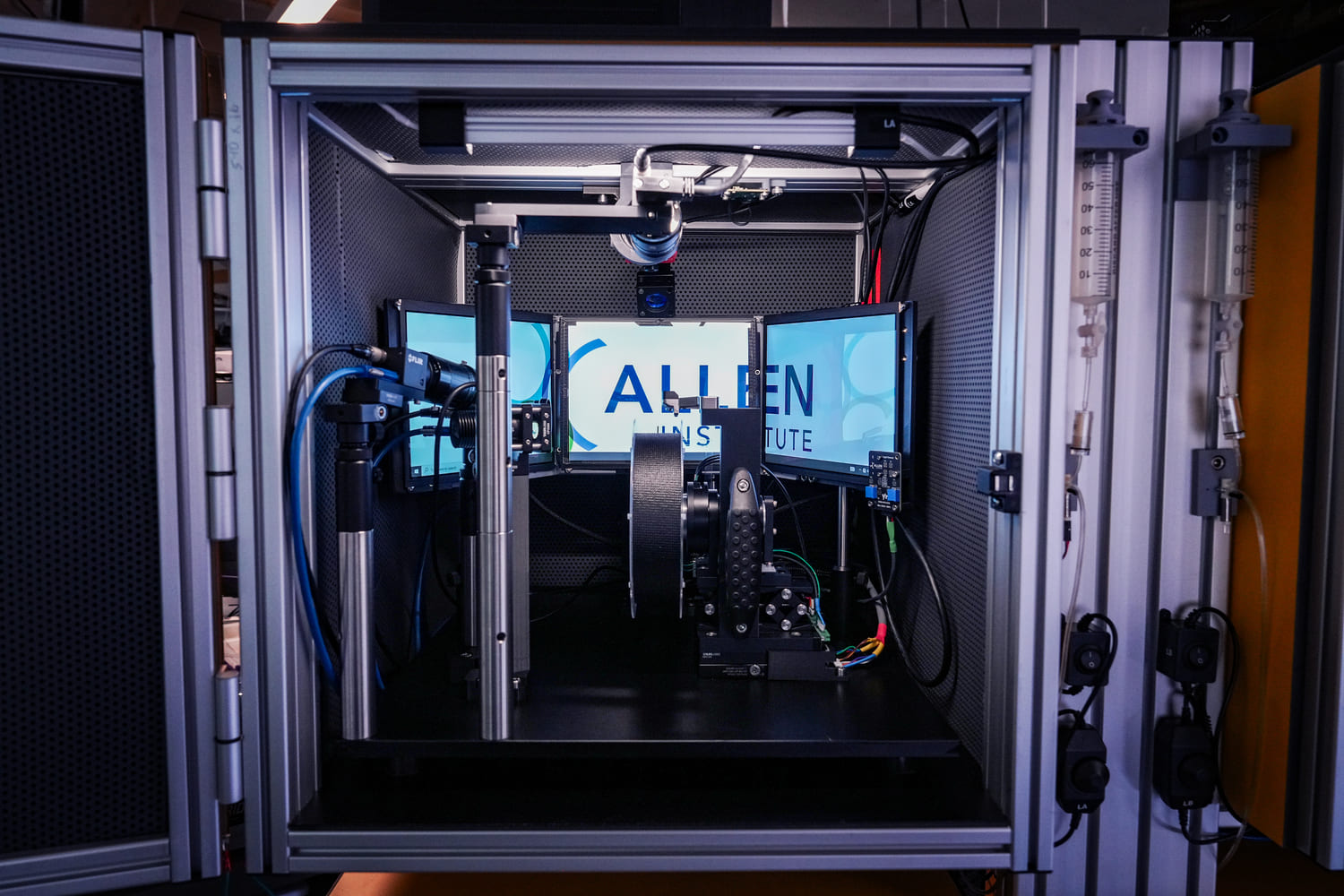

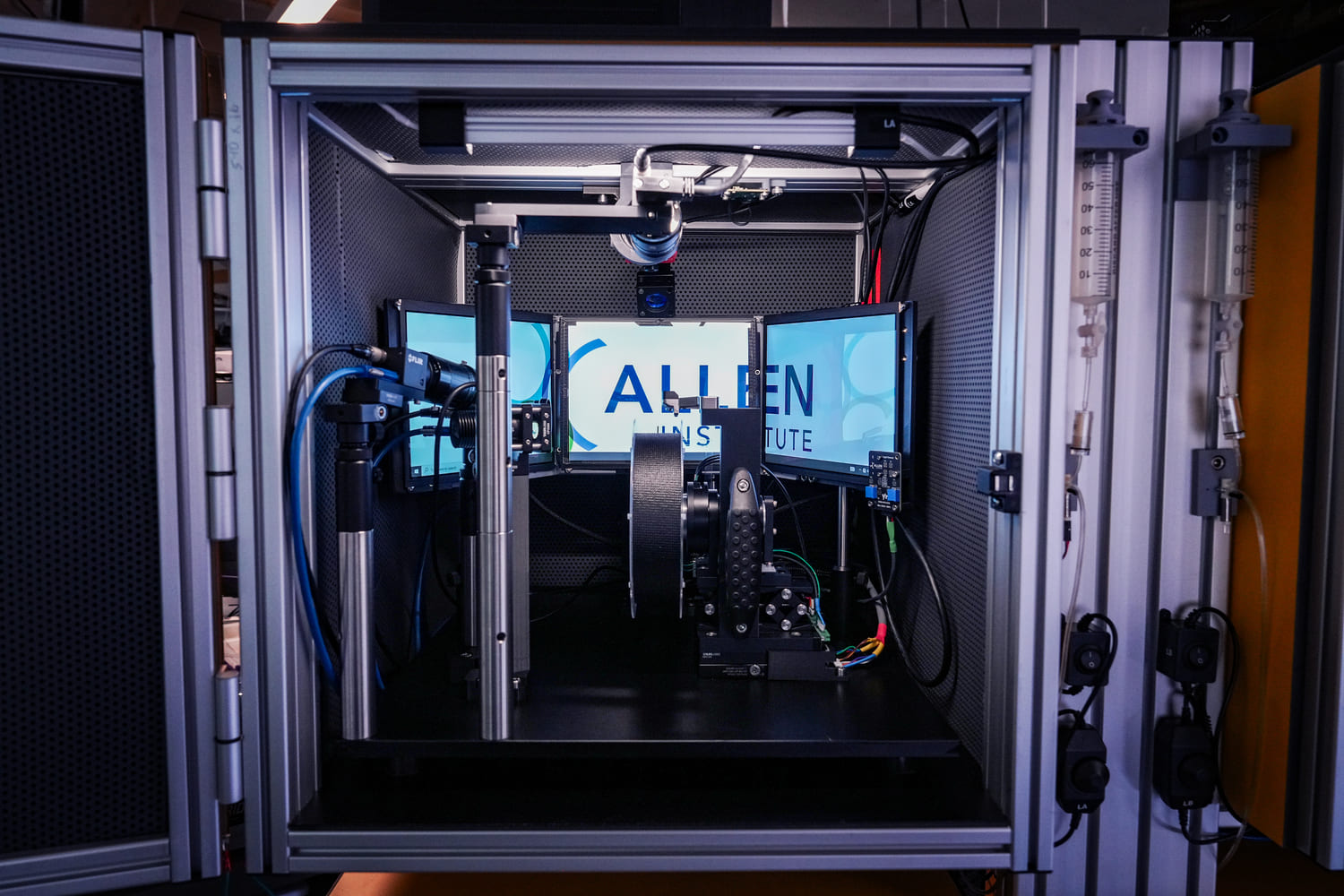

In natural environments, resources cluster in patches that deplete over time. Efficient foraging requires animals to infer hidden environmental structures from experience and adapt their strategy based on sensory cues, prior knowledge, and internal state. We developed an olfactory patch foraging task in a closed-loop, multi-sensory virtual reality environment where mice interact with a procedurally generated linear track to make decisions about when to stay or leave depleting resource patches. Virtual reality's flexibility allows us to precisely control sensory and reward statistics, isolating the neural mechanisms underlying learning and decision-making.
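The stay-or-leave decision in a depleting patch can be sketched with a simple threshold rule. This is a toy illustration only: the exponential depletion of reward probability, the fixed leaving threshold, and all names and values (`visits_before_leaving`, `p0`, `decay`, `leave_threshold`) are assumptions made for this example and are not taken from the task's actual reward statistics.

```python
def visits_before_leaving(p0=0.9, decay=0.8, leave_threshold=0.3,
                          max_visits=100):
    """Count reward-site visits before a threshold forager leaves a patch.

    Assumes reward probability starts at p0 and is multiplied by `decay`
    after every visit (a hypothetical depletion rule). The forager stays
    while the current reward probability is at least `leave_threshold`.
    """
    p = p0
    visits = 0
    while p >= leave_threshold and visits < max_visits:
        visits += 1   # harvest once more at the current patch
        p *= decay    # the patch depletes
    return visits


if __name__ == "__main__":
    # A more patient forager (lower threshold) stays longer in the patch.
    for threshold in (0.5, 0.3, 0.1):
        n = visits_before_leaving(leave_threshold=threshold)
        print(f"threshold={threshold}: leaves after {n} visits")
```

In a real session the animal does not observe the depletion rule directly and must infer it from experience, which is what makes the task informative about learning and internal state.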

Using this behavioral paradigm, we investigate how the brain dynamically routes sensory information to guide behavior depending on context. Mice perform a cross-modal switching task in which they alternate between responding to visual versus auditory stimuli across blocks of trials. Importantly, the rewarded modality is not explicitly cued on each trial; mice must instead maintain this information in working memory and use trial outcomes to infer when contingencies have changed. This paradigm allows us to examine how visual and auditory sensory networks are selectively coupled or uncoupled from motor response networks as task demands shift.

Detecting changes in the visual world is a fundamental behavior, and the visual cortex plays a key role in this process. In this go/no-go task, mice view a continuous series of flashed stimuli (e.g., gratings, natural images) and earn water rewards by licking when the identity of the stimulus changes. This flexible paradigm allows us to probe multiple cognitive functions: perception of visual features (orientation, contrast, color, natural scenes), sensory learning, generalization of learned rules to novel stimuli, prediction of temporal sequences, and short-term memory during inter-stimulus delays.

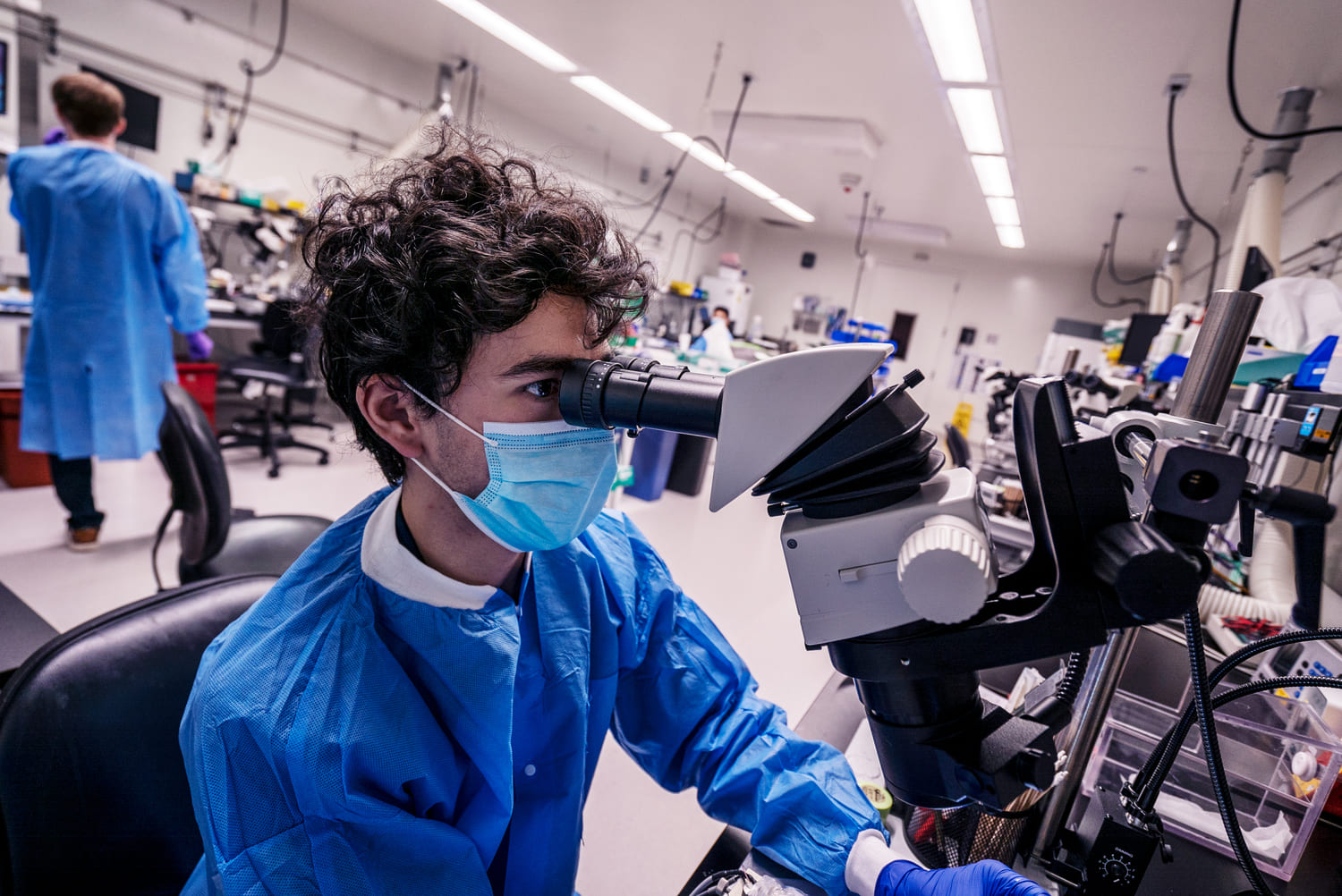

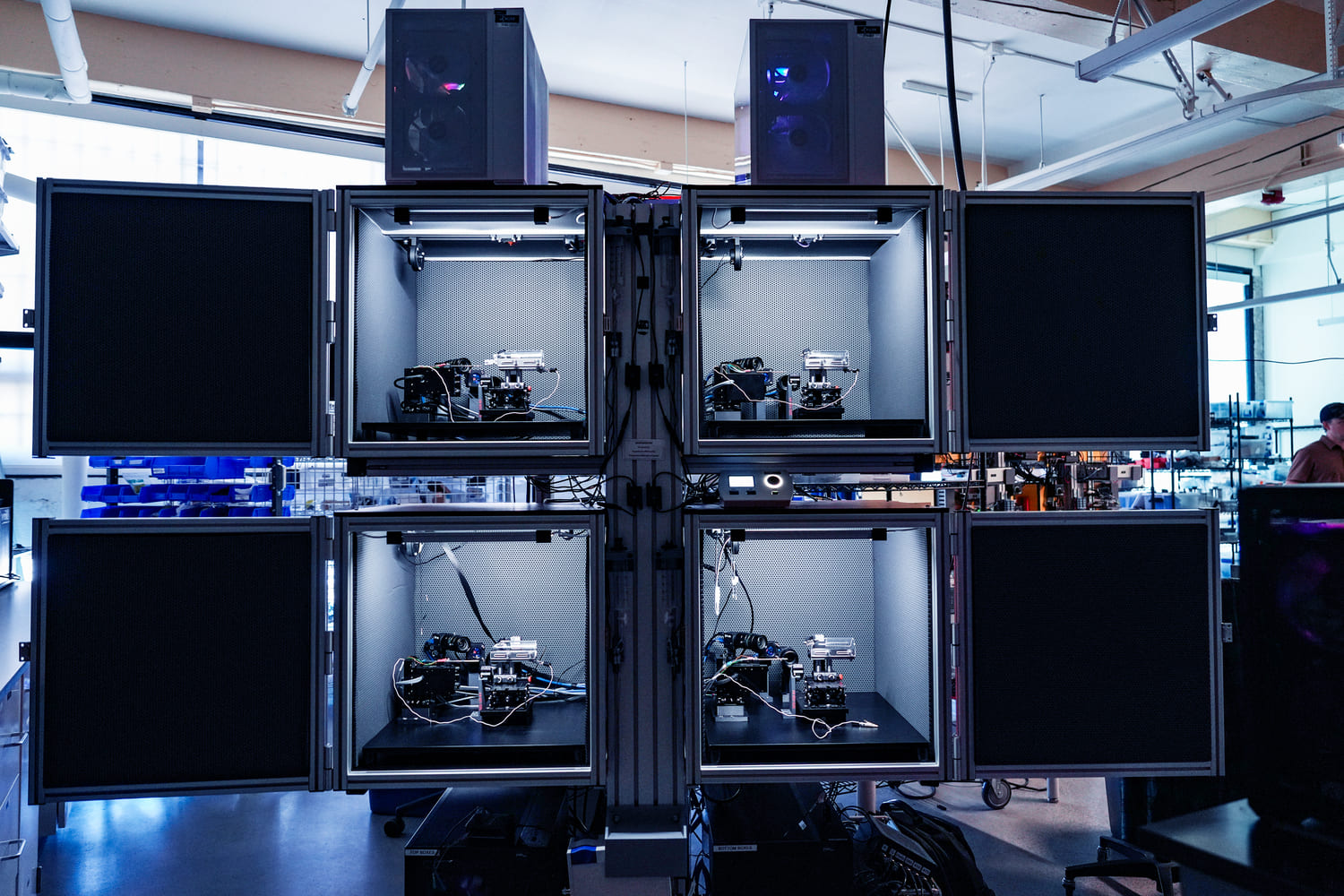

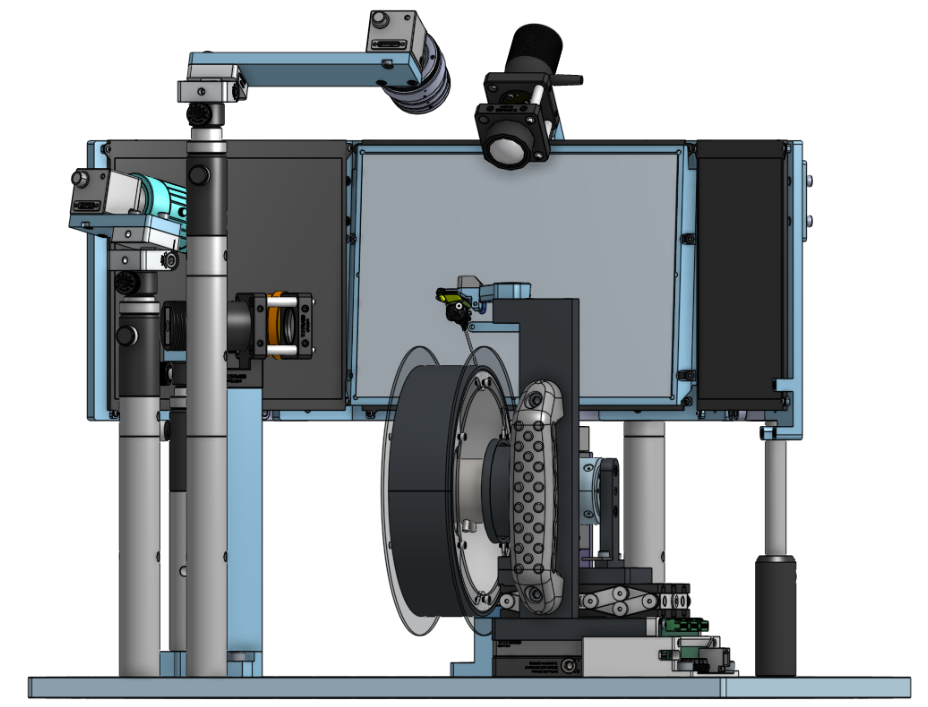

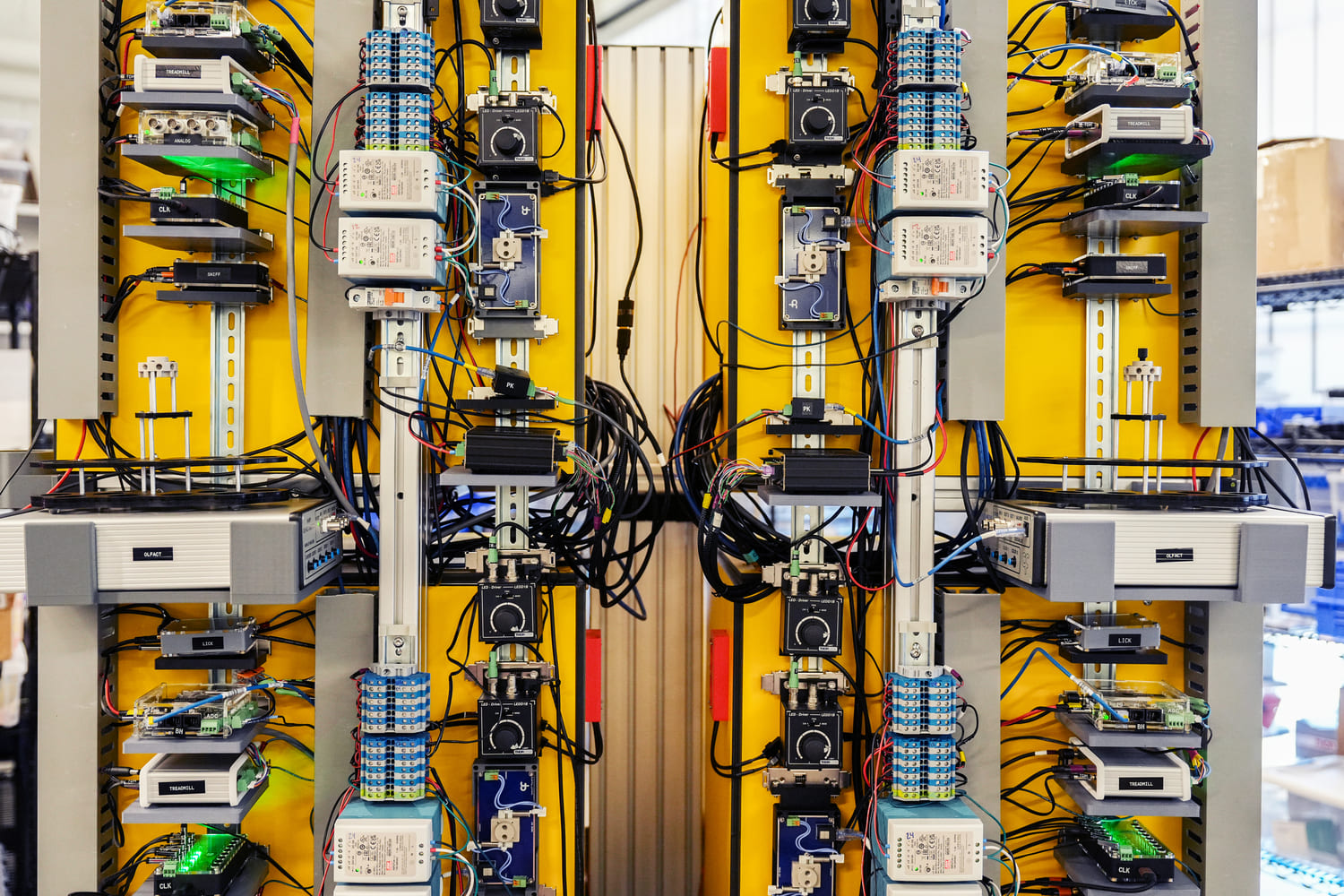

Next-generation neurotechnology enables large-scale neural recordings, and realizing its potential for discoveries in brain function requires equally scalable, reproducible behavioral experiments. Our approach balances standardization with flexibility: uniform hardware rigs, automated training curricula, and streamlined workflows provide structure and coherence, while preserving the adaptability essential for discovery science. This infrastructure supports training of many mice on complex behavioral tasks with consistent data quality across animals, experimenters, and time, enabling systematic, large-scale behavioral neuroscience.

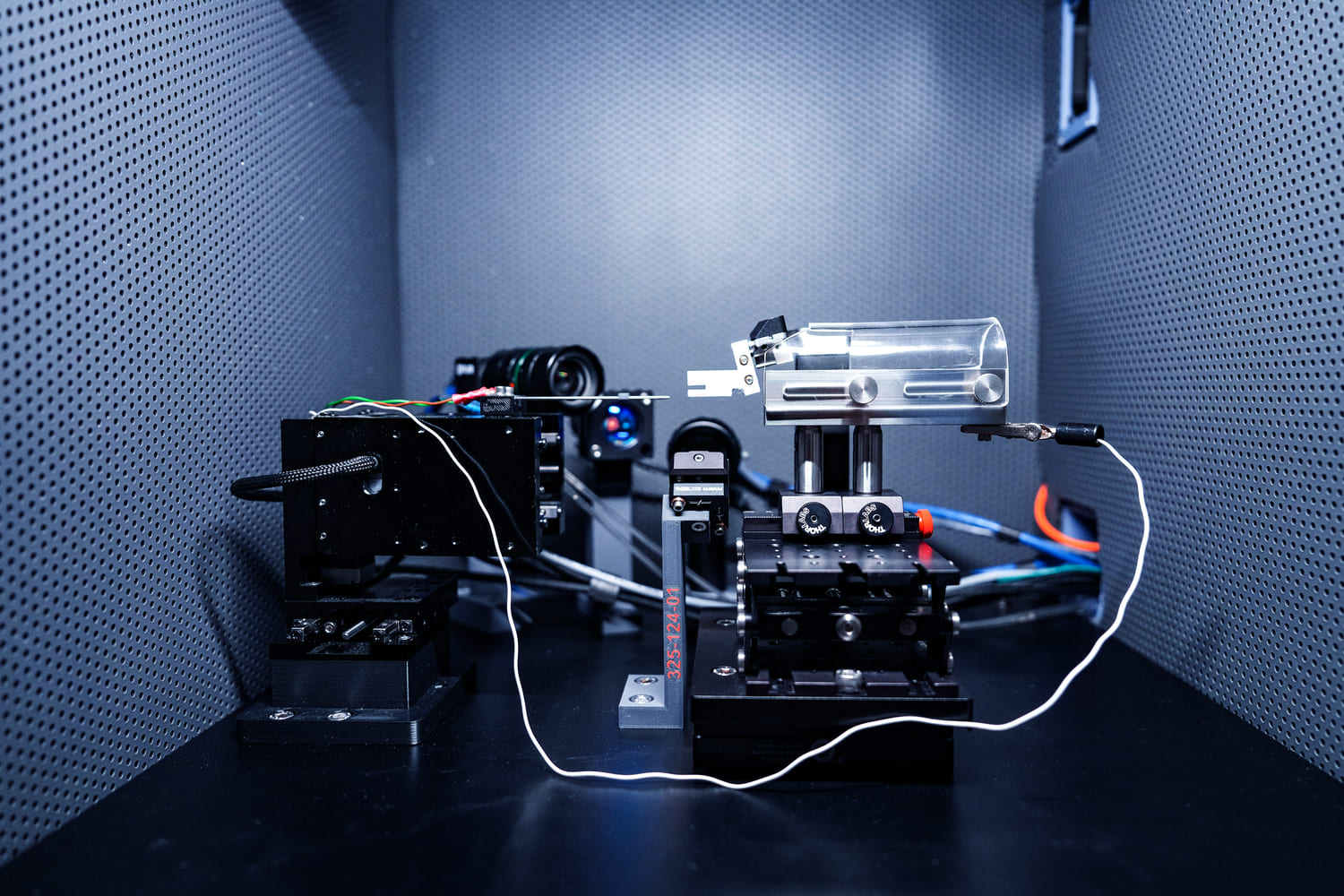

Rapid experimental design is essential for hypothesis-driven discovery. We have developed a modular ecosystem of instrumentation and software architecture built on structured data and configuration formats. These tools are benchmarked for reproducibility and data quality, enabling experimenters to efficiently pilot new tasks and scale successful paradigms. This modular approach facilitates resource sharing, interoperability across experimental and analysis pipelines, and reproducibility—supporting both innovation and rigor at scale.

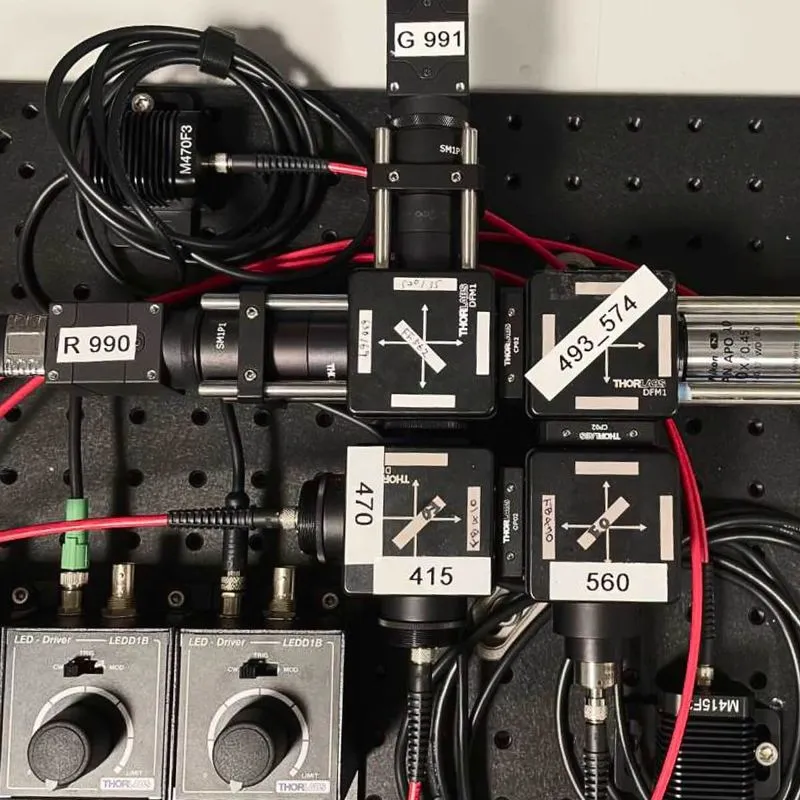

We are active contributors to open science initiatives that are reshaping how behavioral neuroscience is conducted and shared. Our team helps develop and advance two key ecosystems, Harp and Bonsai. Harp, an open-source platform for real-time data acquisition and experimental control, features a lightweight binary protocol, precise hardware synchronization, reusable hardware templates, and streamlined data loading. Bonsai, a reactive visual programming language, interfaces seamlessly with Harp devices. Together, Harp and Bonsai enable rapid prototyping of multi-modal, closed-loop experiments with precise temporal control and efficient parallel data logging. By contributing to these shared tools, we help build a collaborative culture where experimental methods and data are openly accessible to the broader neuroscience community.

The Surgery team offers a variety of aseptic rodent surgical procedures ranging from stereotaxic injections to headpost implantation and cranial windowing.

The Neuropixels platform uses pioneering technology for highly reproducible, targeted, brain-wide, cell-type-specific electrophysiology to record neural activity from defined neuron types across the brain.

The Molecular Anatomy platform combines innovative histology, imaging, and analysis techniques to map the morphology and molecular identity of neuron types across the whole brain.

The Fiber Photometry platform enables optical measurement of neural activity to study the function and dynamics of neural circuits in behaving animals.

The Behavior platform uses advanced technology to implement a standardized, modular, multi-task virtual reality gymnasium for mice, with the goal of studying brain function across different behaviors at scale.